Dr. Melvin Vopson, BSc, MSc, PhD, is an Associate Professor of Physics at the University of Portsmouth in the United Kingdom. With a strong background in physics, he has contributed extensively to fields such as solid-state physics, information theory, and materials science. His recent paper, “The second law of infodynamics and its implications for the simulated universe hypothesis,” proposes a groundbreaking way to understand entropy by focusing on how information behaves across different systems.

Traditionally, entropy is viewed through thermodynamics, where it represents the level of disorder or randomness in a physical system, like the way heat spreads from a hot object to its cooler surroundings. According to the Second Law of Thermodynamics, the entropy of an isolated system never decreases over time; it stays constant or increases, leading to greater disorder. However, Dr. Vopson’s theory diverges from this by suggesting that information might not always follow this rule.

In information theory, entropy measures the uncertainty or randomness of information within a system. Dr. Vopson makes the case that information entropy can remain constant or even decrease over time, implying that information tends to become more organized or simplified under certain conditions. This is a radical departure from traditional thinking, opening up new possibilities for how we understand the role of information in both natural and artificial systems.
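The contrast between the two laws can be written compactly. The first line is the standard Shannon definition of information entropy for a set of states with probabilities $p_i$; the last two lines restate the two laws as described above. The symbols $S_{\text{phys}}$ and $S_{\text{info}}$ are notation chosen here for illustration and may differ from the notation in the paper.

$$
H = -\sum_{i} p_i \log_2 p_i
$$

$$
\frac{dS_{\text{phys}}}{dt} \geq 0 \quad \text{(Second Law of Thermodynamics, isolated system)}
$$

$$
\frac{dS_{\text{info}}}{dt} \leq 0 \quad \text{(Second Law of Infodynamics, as proposed by Vopson)}
$$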

One of the easiest places to observe information entropy is in digital data storage. When data is stored on a medium like a hard drive or magnetic tape, it exists in a highly ordered state with bits arranged in precise sequences to convey specific information. Over time, external forces such as thermal fluctuations can cause these bits to degrade or lose their distinct patterns, leading to data loss. According to Vopson’s law, this process aligns with the idea that information entropy will decrease as the stored data gradually returns to a blank state, where no distinguishable information exists.
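The storage example can be sketched as a toy simulation. This is only an illustration under stated assumptions, not the calculation from the paper: the helper names, the 20% decay probability, and the starting pattern of half ones and half zeros are all hypothetical. The starting pattern is chosen so that the per-bit Shannon entropy begins at its maximum and can only fall as bits relax to 0; with a different starting mix, this simple measure could rise before it falls.

```python
import math
import random


def shannon_entropy_bits(bits):
    """Shannon entropy per bit (in bits) of a sequence of 0s and 1s."""
    n = len(bits)
    if n == 0:
        return 0.0
    p1 = sum(bits) / n
    entropy = 0.0
    for p in (p1, 1.0 - p1):
        if p > 0:  # by convention, 0 * log2(0) contributes nothing
            entropy -= p * math.log2(p)
    return entropy


def relax(bits, decay_probability, rng):
    """Toy decay step: each 1 independently falls back to 0."""
    return [0 if b == 1 and rng.random() < decay_probability else b
            for b in bits]


rng = random.Random(0)        # fixed seed so the run is repeatable
bits = [1, 0] * 32            # 64 bits, half ones (hypothetical stored data)
history = [shannon_entropy_bits(bits)]

for _ in range(1000):         # safety cap on the number of decay steps
    if not any(bits):         # stop once the medium is blank
        break
    bits = relax(bits, 0.2, rng)
    history.append(shannon_entropy_bits(bits))

print(f"start: {history[0]:.3f} bits/bit, end: {history[-1]:.3f} bits/bit")
```

In this toy model the entropy starts at 1 bit per stored bit and ends at 0 once every bit has relaxed, mirroring the "return to a blank state" described above.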

This concept also extends to data compression technologies. When we compress digital files, we remove redundant or unnecessary data to make the file smaller while preserving essential information. Compression shrinks the number of bits needed to hold the same content by encoding the data more efficiently, which fits Vopson’s theory that information systems tend to minimize entropy over time.

The Second Law of Infodynamics isn’t just about digital data. It also has significant implications for understanding biological processes, especially genetic mutations. In biological systems, DNA serves as the blueprint for life, containing genetic instructions encoded in long sequences of nucleotides (the basic building blocks of DNA and RNA). Mutations, or changes in these genetic sequences, can occur in several ways, including insertions, deletions, and substitutions of nucleotides.

Vopson’s theory proposes that genetic mutations do not happen randomly but instead tend to follow patterns that reduce information entropy. For example, studies of viruses like SARS-CoV-2 have shown that over time, mutations often lead to a simplification of the genetic code, either by deleting non-essential parts or by making changes that do not significantly alter the overall information content. This observation supports the idea that biological systems may also be governed by principles that seek to optimize or conserve information, rather than letting it become increasingly chaotic.
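One simple way to put a number on the "information content" of a genetic sequence is the Shannon entropy of its nucleotide frequencies. The sketch below is a minimal illustration only: the function name and both sequences are made up for this example and are not real SARS-CoV-2 data, and measuring entropy over single nucleotides is an assumption here, since the paper may group the sequence differently.

```python
import math
from collections import Counter


def nucleotide_entropy(sequence):
    """Shannon entropy (bits per nucleotide) of the letter frequencies."""
    counts = Counter(sequence.upper())
    total = sum(counts.values())
    if total == 0:
        return 0.0
    entropy = 0.0
    for count in counts.values():
        p = count / total
        entropy -= p * math.log2(p)
    return entropy


# Hypothetical sequences, invented for illustration only.
reference = "ACGTACGTACGT"   # all four nucleotides equally frequent
variant = "ACGTACGTAAAA"     # substitutions make A dominant

print(f"reference: {nucleotide_entropy(reference):.3f} bits")
print(f"variant:   {nucleotide_entropy(variant):.3f} bits")
```

A sequence that uses all four nucleotides equally often scores the maximum of 2 bits per nucleotide, while a sequence dominated by one nucleotide scores lower. Tracking a measure like this across dated genome samples is the kind of comparison the mutation argument relies on.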

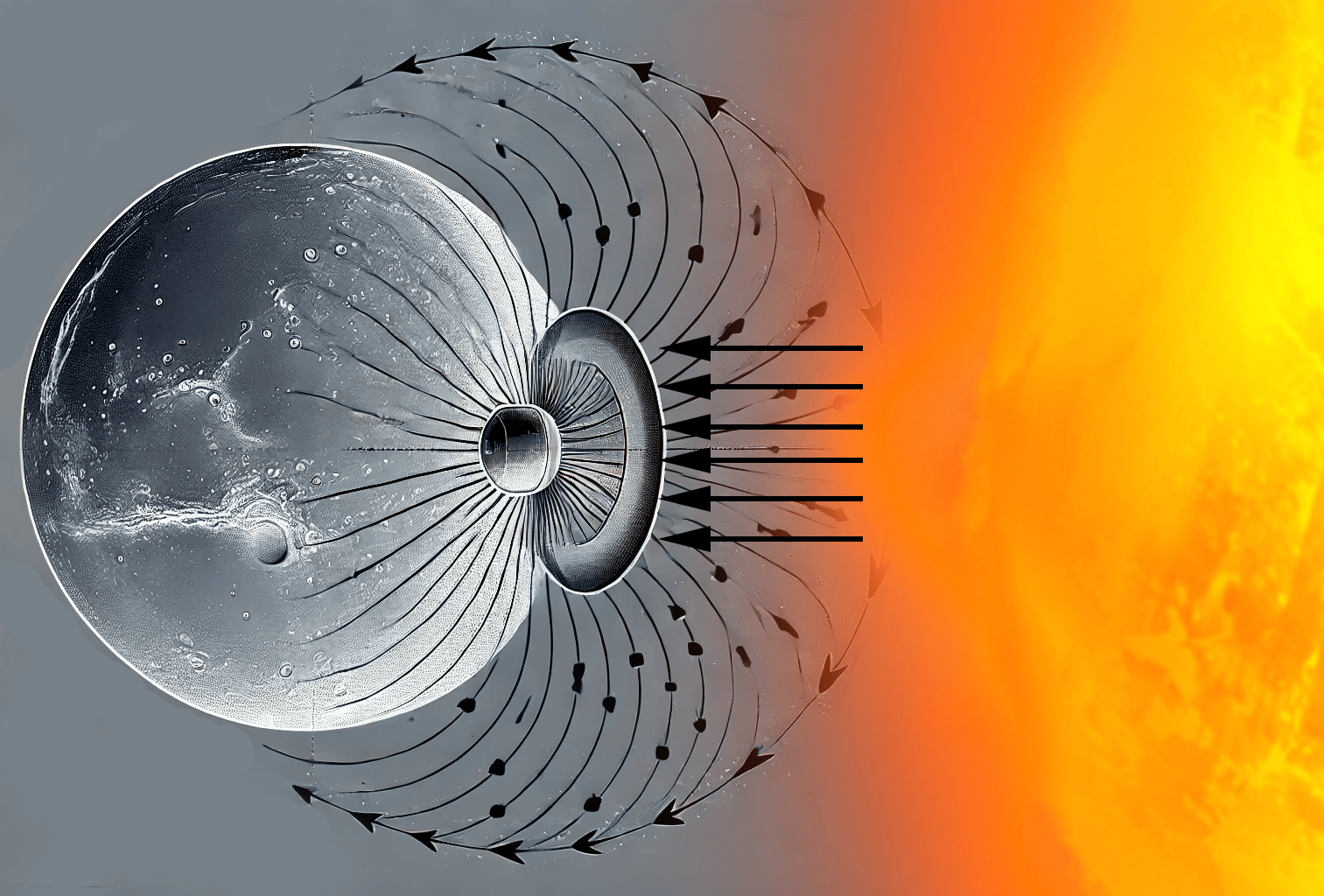

Vopson’s law also sheds light on atomic behavior and the fundamental rules that govern matter at the smallest scales. For instance, it offers an explanation for Hund’s rule, which states that electrons in an atom occupy orbitals of equal energy singly before pairing up. This behavior minimizes the energy state of the atom, which can be viewed as a reduction in information entropy. Electrons in atoms arrange themselves in ways that create the most stable, low-energy configurations, showing a preference for order over chaos.

Furthermore, quantum mechanics suggests that particles like electrons do not exist in fixed positions but in probabilistic states, where their properties are defined by probabilities rather than certainties. Vopson’s theory implies that even at this quantum level, there may be underlying principles that govern how information is distributed and maintained, potentially aligning with the idea that information entropy remains stable or decreases.

Symmetry is another key concept tied to Vopson’s theory. In nature, symmetrical patterns, from snowflakes to atomic structures, are common and represent states of low information entropy. This is because symmetrical objects have less randomness in their structure: knowing one part of a symmetrical object often tells you a lot about the rest of it. High symmetry corresponds to lower entropy since less information is needed to describe the system fully. Vopson’s law provides a framework for understanding why nature might favor these low-entropy, highly symmetrical states.
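The link between symmetry and description length can be illustrated with a rough experiment. The sketch below uses the compressed size from Python's standard `zlib` module as a stand-in for "information needed to describe the system." That proxy is an assumption made here, not a method from the paper, and the example only covers translational symmetry (a repeating tile), which is the kind of regularity a general-purpose compressor can detect.

```python
import random
import zlib


def compressed_size(data):
    """Bytes needed after zlib compression: a rough description-length proxy."""
    return len(zlib.compress(data, 9))


rng = random.Random(0)  # fixed seed so the run is repeatable

# Symmetric pattern: one 16-byte tile repeated 256 times (4096 bytes).
tile = bytes(rng.getrandbits(8) for _ in range(16))
symmetric = tile * 256

# Irregular pattern: 4096 independent pseudo-random bytes.
irregular = bytes(rng.getrandbits(8) for _ in range(4096))

print("symmetric:", compressed_size(symmetric), "bytes")
print("irregular:", compressed_size(irregular), "bytes")
```

Both inputs are the same length, but the repeating pattern compresses to a small fraction of the irregular one, because describing one tile and the rule "repeat it" is enough to rebuild the whole.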

One of the most intriguing implications of Vopson’s theory is its potential support for the “Simulated Universe Hypothesis.” If our universe were a digital construct, similar to a computer simulation, it would need to follow principles that optimize information storage and processing to maintain its structure and function. The observed tendency of information entropy to decrease or remain stable could align with what we might expect in such a computational system, where minimizing data complexity is crucial.

If our reality were designed with efficiency in mind, following rules like Vopson’s Second Law of Infodynamics, it could suggest that our universe is more like a sophisticated computer program than a purely physical system. This hypothesis challenges our conventional views of reality and opens up fascinating new avenues for scientific exploration.

The Second Law of Infodynamics has far-reaching implications for multiple fields. For genetics, it could lead to new models for predicting mutation patterns, which could impact everything from disease research to evolutionary biology. In digital technology, it may help develop more efficient data storage methods that leverage principles of information entropy. In physics and cosmology, it could offer new ways to understand the balance between order and chaos in the universe, potentially addressing unresolved questions like the “Entropic Paradox,” where the universe seems to maintain a stable total entropy despite its constant expansion.

Vopson’s theory encourages a fresh look at how information behaves across all levels of existence—from the quantum realm to biological systems to the cosmos itself. This approach could provide new insights into the fundamental workings of reality, uniting different areas of science under a common framework based on the principles of information entropy.

Dr. Melvin Vopson’s thought process and approach to his groundbreaking paper perfectly encapsulate the core mission of Newtonian Innovator: where imagination meets logic. His work exemplifies how thought experiments, which begin as theoretical speculations, can be expanded upon through rigorous scientific analysis to make substantial contributions to the scientific process. Dr. Vopson’s innovative thinking not only challenges established norms but also opens up new avenues for understanding the fundamental principles of the universe. He embodies the spirit of discovery by daring to explore uncharted territories where creative ideas and logical reasoning converge, resulting in a theory that pushes the boundaries of information theory and has profound implications across multiple scientific disciplines.

Ultimately, the Second Law of Infodynamics challenges our deepest assumptions about how the universe operates. As scientists and researchers further explore this hypothesis, we may uncover new principles that could revolutionize our understanding of reality, leading to breakthroughs that redefine the very fabric of existence.

Link to paper: https://pubs.aip.org